Shoppers Can Now Teach Google What They Like and See More of It

Connecting people with products is a lucrative game, and a tricky one to get right. Even Google, the master of search with an enormous Shopping Graph of retail data, realizes it can’t do it alone. So the company is enlisting the help of users.

On Wednesday, the tech giant revealed a set of updates that allow people to rate styles in their product search results and mark their favorite brands, effectively teaching Google what they like so they can see more of it. Taken together with its AI-generated shopping images and virtual apparel try-on tool, the updates show that the company’s commerce objectives are clearly zeroing in on fashion.

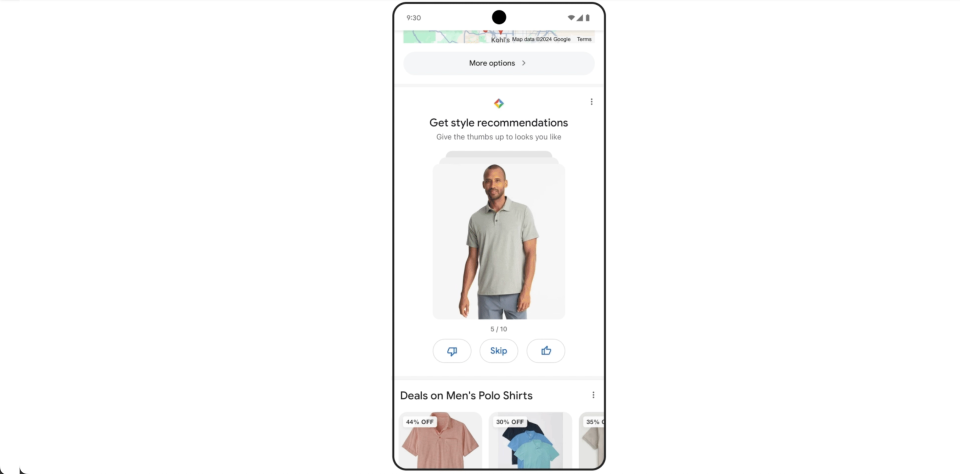

The new “Style Recommendations” feature allows individuals to rate their product search results, according to Sean Scott, vice president and general manager of consumer shopping at Google. Explaining how it works, he said, “when you search for certain apparel, shoes or accessories items — like ‘straw tote bags’ or ‘men’s polo shirts’ — you’ll see a section labeled ‘style recommendations.’ There, you can rate options with a thumbs-up or thumbs-down, or a simple swipe right or left, and instantly see personalized results.”

The format may feel familiar to Stitch Fix users. Its Style Shuffle game uses a similar thumbs-up/thumbs-down rating mechanism, so it can learn tastes or fashion preferences. What’s interesting about these scenarios is that both Google and Stitch Fix boast about their AI and machine learning capabilities, but still take in user feedback to boost the relevancy of their recommendations.

Getting consumers involved in their own preference modeling isn’t a bad idea. If done right, it can elevate transparency, and if people don’t like the feature, Google said, they can shut it off by clicking the three dots next to the “Get style recommendations” section or dig into personalization options in the “About this result” panel.

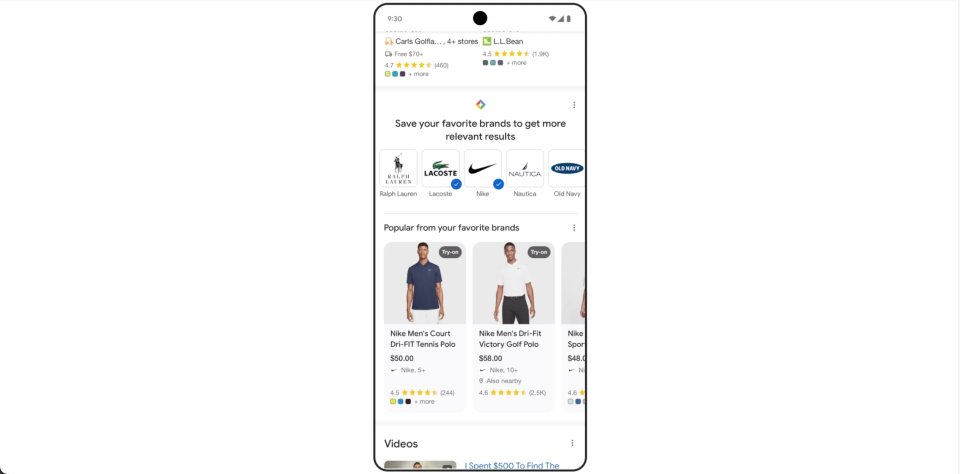

With the ability to mark their favorite brands, people can take an active part in shaping the product results Google dishes up for them.

Like others, Google also seems to understand a pivotal fact about shopping for fashion: It’s an inherently visual task that has historically hinged on text descriptions. Using images to search for something visual makes far more sense, and advances in computer vision make that feasible, which is why a growing number of platforms have been investing in visual search, most recently Samsung in its latest Galaxy smartphone. But this usually requires the user to photograph the item or have an existing image of it.

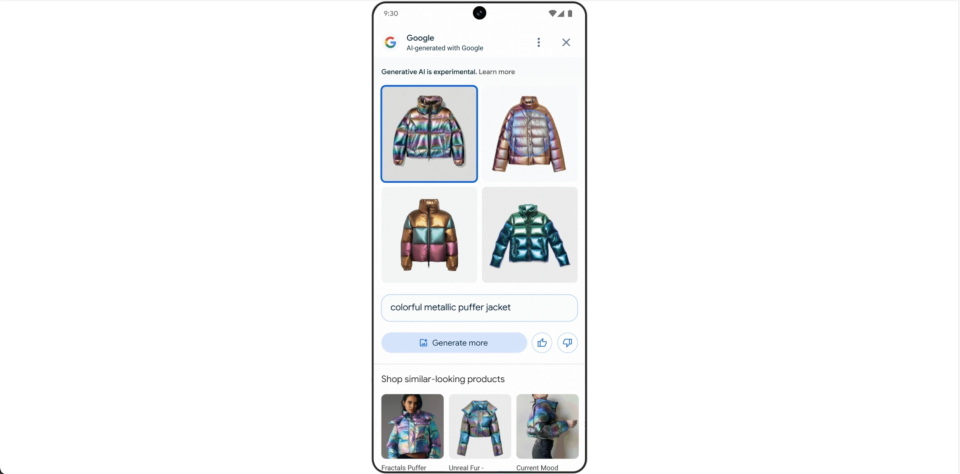

That seems almost quaint now in the age of generative AI. Google seems to think so too, since it created an experimental AI-based visualization tool that can power product searches.

“We developed AI-powered image generation for shopping so you can shop for apparel styles similar to whatever you had in mind,” said Scott. Users simply tell the system what they’re looking for, and the feature creates a realistic image of it and then finds items that best match the picture.

The tool plugs into Google’s Shopping Graph, a data set of product and seller information packed with more than 45 billion listings that are continuously refreshed, to the tune of more than 2 billion listing updates every hour.

It’s not a polished release, but an experimental feature. Anyone in the U.S. can try it out, but they must have opted into Search Generative Experience in Search Labs, Google’s test bed, to access it in the Google app or mobile browsers.

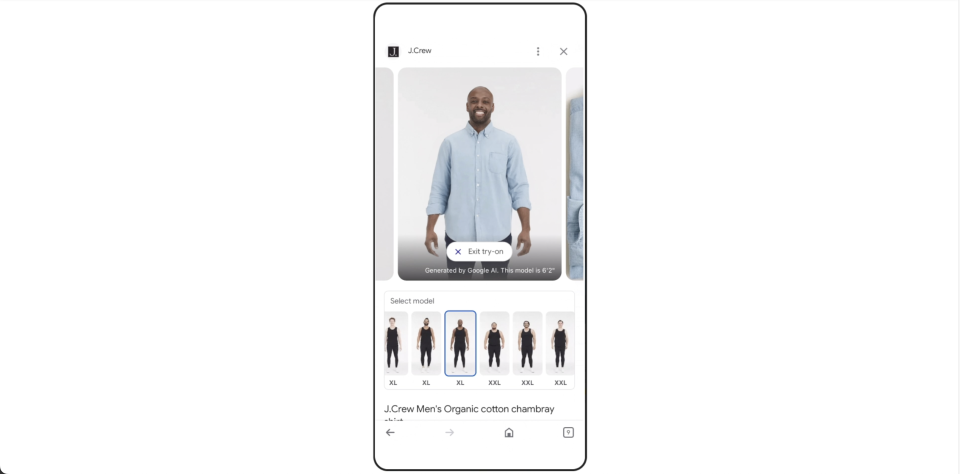

Once people find the look they seek, the next step, according to Google, is to visualize what it might look like when worn. This is where its virtual apparel try-on steps in.

Announced in June 2023, this feature uses AI to digitally place clothes on human models. “Sixty-eight percent of online shoppers agree that it’s hard to know what a clothing item will look like on them before you actually get it, and 42 percent of online shoppers don’t feel represented by the images of the models that they see,” Lillian Rincon, Google’s senior director of product, told WWD at the time.

To remedy that, the company shot a diverse range of people, then used AI — fed by data from its Shopping Graph — to realistically depict how fabrics would crease, cling or drape on different figures.

It doesn’t work for every brand, product or clothing category, at least not yet. For now, U.S. users can find it on desktop, mobile and the Google app by looking for the “try-on” icon in shopping results for men’s or women’s tops, then see how an item wears in sizes from XXS to 4XL.

Few things can sink a shopping experience like picking through piles of erroneous items, which still happens, even with recent breakthroughs in machine learning. That must be very apparent to Google, which sees people shopping more than a billion times per day. The fact that it aims to solve the issue with a blend of user feedback and AI features suggests that, even as the tech advances, it can’t completely forgo the human element.
